Think Diffusion! Think Probability: A probabilistic perspective of diffusion models

[Still writing this blog; yet to be completed]


Similarly, random noise is added to the image, and the goal of the model is to predict the added noise. However, if very little noise is added, the model can simply learn to copy the color of a neighboring pixel. On the other hand, if too much noise is added at once, the context required for learning is lost. Therefore, diffusion models are trained with a noise schedule: small amounts of noise are added at each step, and we learn $f(x_0 + n_t, t) = x_0$, where $n_t$ is the total noise added up to time step $t$. Predicting $x_0$ and predicting $n_t$ are equivalent here, since either one is recovered from the other by subtraction from the noisy input $x_0 + n_t$.
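The schedule described above can be sketched in a few lines. This is a minimal illustration, not the post's `score.py`: it assumes a toy 1-D "image", purely additive Gaussian noise, and a fixed per-step noise level `sigma_step` (a hypothetical parameter). Because independent Gaussian increments add up, the noise accumulated after $t$ small steps, $n_t$, is itself Gaussian with variance $t\,\sigma_{\text{step}}^2$, so a training pair $(x_0 + n_t, t) \mapsto x_0$ can be drawn in one shot instead of simulating every step.

```python
import numpy as np

def accumulate_noise(x0, t, sigma_step, rng):
    """Add t small Gaussian increments to x0, one step at a time."""
    n_t = np.zeros_like(x0)
    for _ in range(t):
        n_t += sigma_step * rng.standard_normal(x0.shape)
    return x0 + n_t, n_t

def make_training_pair(x0, t, sigma_step, rng):
    """Shortcut: sample n_t directly, since n_t ~ N(0, t * sigma_step**2)."""
    n_t = np.sqrt(t) * sigma_step * rng.standard_normal(x0.shape)
    # A model f would be trained so that f(x0 + n_t, t) is close to x0.
    return (x0 + n_t, t), x0

rng = np.random.default_rng(0)
x0 = np.linspace(-1.0, 1.0, 100_000)   # toy "image" with 100k pixels (assumed)
t, sigma_step = 50, 0.05               # hypothetical step count and per-step noise

_, n_stepwise = accumulate_noise(x0, t, sigma_step, rng)
(x_t, _), target = make_training_pair(x0, t, sigma_step, rng)
n_oneshot = x_t - target

# Both variances should be close to t * sigma_step**2.
print(n_stepwise.var(), n_oneshot.var(), t * sigma_step**2)
```

The one-shot shortcut is what makes training cheap: a random $t$ can be picked per example without running the whole chain.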

We can also think of generative modeling as learning a transformation from a known distribution to an unknown one. For example, suppose we want to sample from a Normal distribution. We first sample from a uniform distribution and then apply the inverse CDF of the Normal; the resulting random variable is Normally distributed. (Side note: the inverse CDF of the Normal distribution has no closed form, so libraries rely on numerical approximations, such as polynomial approximations [7].)
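Inverse transform sampling for the Normal can be tried with only the Python standard library. The sketch below uses `statistics.NormalDist.inv_cdf`, which CPython evaluates with a numerical approximation rather than a closed form; the sample size and seed are arbitrary choices for illustration.

```python
import random
from statistics import NormalDist, fmean, pstdev

def sample_normal_via_inverse_cdf(n, seed=0):
    """Inverse transform sampling: push U[0, 1) samples through the Normal inverse CDF."""
    rng = random.Random(seed)
    std_normal = NormalDist(mu=0.0, sigma=1.0)
    samples = []
    while len(samples) < n:
        u = rng.random()          # u ~ U[0, 1)
        if u == 0.0:              # inv_cdf is undefined at exactly 0, so resample
            continue
        samples.append(std_normal.inv_cdf(u))
    return samples

xs = sample_normal_via_inverse_cdf(50_000)
print(fmean(xs), pstdev(xs))      # should be close to 0 and 1
```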

We can express this as follows: for a random variable $z$ with a known distribution (uniform in our example), we learn a function $f$ (the inverse CDF of the distribution we want to sample from). We then sample $x = f(z)$, where $z \sim U[0,1]$. Since we do not know the inverse CDF of an unknown sampling process, we approximate it with a neural network. This is a common theme in deep learning: if you do not know a function, replace it with a neural network and learn it from samples.
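To make "learn $f$ from samples" concrete without training a network, the sketch below swaps the neural network for the simplest learnable inverse CDF: the empirical quantile function, i.e. linear interpolation over sorted samples. The "unknown" process is assumed, for illustration only, to be an exponential distribution with rate 1 (mean 1, median $\ln 2$); the helper name `fit_inverse_cdf` is hypothetical.

```python
import numpy as np

def fit_inverse_cdf(samples):
    """'Learn' f = F^{-1} from data as a piecewise-linear empirical quantile function."""
    xs = np.sort(np.asarray(samples))
    # Plotting positions: the k-th sorted sample sits at probability (k + 0.5) / n.
    probs = (np.arange(len(xs)) + 0.5) / len(xs)

    def f(z):
        return np.interp(z, probs, xs)   # clamps to the data range outside [probs[0], probs[-1]]

    return f

rng = np.random.default_rng(0)
data = rng.exponential(scale=1.0, size=20_000)   # stand-in for the unknown sampling process
f = fit_inverse_cdf(data)

z = rng.uniform(0.0, 1.0, size=20_000)           # z ~ U[0, 1]
x_new = f(z)                                     # x = f(z): new samples from the learned f
print(data.mean(), x_new.mean())
```

In one dimension sorting gives the inverse CDF directly; in higher dimensions (e.g. images) there is no single sorted order, which is one reason a neural network is used instead.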

Suppose we want to start from a Gaussian instead of the uniform distribution above. If $x \sim \mathcal{N}(0,1)$ and $\phi_n(\cdot)$ is the CDF of the standard normal, then $\phi_n(x) \sim U[0,1]$, so we can apply $y = \phi_{unk}^{-1}(\phi_n(x))$, where $\phi_{unk}$ is the CDF of the unknown target distribution. Our goal is therefore to learn $\hat{f} = \phi_{unk}^{-1}(\phi_n(\cdot))$.
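A quick check of $\hat{f} = \phi_{unk}^{-1}(\phi_n(\cdot))$ when the target CDF happens to be known. The target is assumed to be Exponential with rate 1, whose CDF $1 - e^{-y}$ inverts to $-\ln(1 - u)$; in a real generative model this inverse is exactly the part that would be learned.

```python
import math
import random
from statistics import NormalDist, fmean, median

phi_n = NormalDist().cdf                      # CDF of the standard normal

def phi_unk_inv(u):
    """Inverse CDF of the assumed target: Exponential(rate=1), F(y) = 1 - exp(-y)."""
    return -math.log1p(-u)                    # -ln(1 - u), numerically stable near u = 0

def f_hat(x):
    """f_hat = phi_unk^{-1}(phi_n(x)): maps N(0, 1) samples to the target distribution."""
    u = min(phi_n(x), 1.0 - 2**-53)           # guard: keep u strictly below 1
    return phi_unk_inv(u)

rng = random.Random(0)
ys = [f_hat(rng.gauss(0.0, 1.0)) for _ in range(50_000)]
print(fmean(ys), median(ys))                  # Exponential(1) has mean 1 and median ln 2
```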

We will cover VAEs, score-based models, and denoising diffusion (DDPM) in this blog. The overarching idea is the same across the three techniques. We will first cover the basic VAE and extend it to the hierarchical VAE. We then show that score-based models are hierarchical VAEs whose forward process has a fixed functional form given by $f(t)$ and $g(t)$, and that the solution is equivalent to solving an SDE. DDPM is a simpler version of score modeling obtained by fixing the noise schedule $\beta_t$ [8]; in SDE form this corresponds to $f(t) = -\tfrac{1}{2}\beta_t$ and $g(t) = \sqrt{\beta_t}$ (the variance-preserving SDE).
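The DDPM/SDE correspondence can be checked numerically. A minimal sketch, assuming the variance-preserving SDE $dx = -\tfrac{1}{2}\beta(t)\,x\,dt + \sqrt{\beta(t)}\,dw$ with a linear schedule $\beta(t) = \beta_{\min} + t(\beta_{\max} - \beta_{\min})$ on $t \in [0, 1]$; the values $\beta_{\min} = 0.1$ and $\beta_{\max} = 20$ are assumed (common choices in the score-SDE literature). Simulating the forward process with Euler-Maruyama should reproduce the closed-form mean $x_0 e^{-\frac{1}{2}\int_0^t \beta(s)\,ds}$ and variance $1 - e^{-\int_0^t \beta(s)\,ds}$.

```python
import numpy as np

BETA_MIN, BETA_MAX = 0.1, 20.0   # assumed linear-schedule endpoints

def beta(t):
    return BETA_MIN + t * (BETA_MAX - BETA_MIN)

def integrated_beta(t):
    """B(t) = integral of beta(s) ds from 0 to t, for the linear schedule."""
    return BETA_MIN * t + 0.5 * (BETA_MAX - BETA_MIN) * t**2

def simulate_vp_sde(x0, t_end, n_steps, rng):
    """Euler-Maruyama for dx = f(t) x dt + g(t) dw with f = -beta/2 and g = sqrt(beta)."""
    x = np.array(x0, dtype=float)
    dt = t_end / n_steps
    for i in range(n_steps):
        t = i * dt
        f_t = -0.5 * beta(t)
        g_t = np.sqrt(beta(t))
        x = x + f_t * x * dt + g_t * np.sqrt(dt) * rng.standard_normal(x.shape)
    return x

rng = np.random.default_rng(0)
x0 = np.full(20_000, 2.0)        # many copies of one data point
t_end = 0.3
x_t = simulate_vp_sde(x0, t_end, n_steps=1000, rng=rng)

B = integrated_beta(t_end)
print(x_t.mean(), 2.0 * np.exp(-0.5 * B))   # simulated vs closed-form mean
print(x_t.var(), 1.0 - np.exp(-B))          # simulated vs closed-form variance
```

With $\alpha_t = e^{-\int_0^t \beta(s)\,ds}$, this marginal is exactly the DDPM-style noising $x_t = \sqrt{\alpha_t}x_0 + \sqrt{1-\alpha_t}z$.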

VAE

We will explain VAE and

Diffusion Models

Diffusion models have been extensively studied, and there is a lot of literature available to learn from [1-4]. Diffusion is usually explained theoretically and then applied directly to complex problems. In this blog, we instead explain diffusion using toy problems.

First, can we generate samples from an 8-mode Gaussian mixture (its PDF is described below)? Since the data is two-dimensional, it is easy to visualize and understand the diffusion process. We then move on to generating digits with a given color: given the style and the color of a digit, the model should generate such a digit. Once we are able to solve the problem of generating a digit with a color.
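The exact PDF of the 8-mode Gaussian is not shown in this section, so the sketch below assumes a common toy layout: an equal-weight mixture $p(x) = \frac{1}{8}\sum_{k=0}^{7}\mathcal{N}(x;\mu_k,\sigma^2 I)$ with means $\mu_k = r(\cos\frac{2\pi k}{8}, \sin\frac{2\pi k}{8})$ on a circle. The radius $r = 2$, the standard deviation $\sigma = 0.1$, and the function names are hypothetical choices, not necessarily those used in the post.

```python
import numpy as np

def eight_mode_centers(radius=2.0):
    """Means mu_k placed evenly on a circle (assumed layout), shape (8, 2)."""
    angles = 2.0 * np.pi * np.arange(8) / 8
    return radius * np.stack([np.cos(angles), np.sin(angles)], axis=1)

def sample_eight_mode(n, radius=2.0, sigma=0.1, rng=None):
    """Pick a mode uniformly at random, then add isotropic Gaussian noise."""
    rng = np.random.default_rng() if rng is None else rng
    centers = eight_mode_centers(radius)
    k = rng.integers(0, 8, size=n)
    return centers[k] + sigma * rng.standard_normal((n, 2))

def eight_mode_pdf(x, radius=2.0, sigma=0.1):
    """p(x) = (1/8) * sum_k N(x; mu_k, sigma^2 I) for points x of shape (n, 2)."""
    centers = eight_mode_centers(radius)
    sq_dist = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)   # shape (n, 8)
    norm = 1.0 / (2.0 * np.pi * sigma**2)                                 # 2-D Gaussian constant
    return norm * np.exp(-sq_dist / (2.0 * sigma**2)).mean(axis=1)

rng = np.random.default_rng(0)
samples = sample_eight_mode(5_000, rng=rng)
print(samples.shape)
```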

Theory of Diffusion

In this section, we briefly cover what is included in score.py. This section contains some math. We will try to cover the preliminaries and do our best to explain things visually.

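We add noise in a particular way: $x_t = \sqrt{\alpha_t}x_0 + \sqrt{1 - \alpha_t}z$, where $z \sim \mathcal{N}(0,1)$. As $t$ grows, $\alpha_t$ decreases from close to 1 toward 0, so $x_t$ moves from the clean data $x_0$ toward pure noise.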
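In DDPM, this formula is the closed form of a Markov chain that adds a little noise per step, $x_s = \sqrt{1-\beta_s}\,x_{s-1} + \sqrt{\beta_s}\,\epsilon_s$, with $\alpha_t = \prod_{s=1}^{t}(1-\beta_s)$ (often written $\bar\alpha_t$). A minimal sketch, assuming the linear schedule from the DDPM paper ($\beta_1 = 10^{-4}$ to $\beta_T = 0.02$, $T = 1000$); the names `q_sample` and `q_chain` are hypothetical and not necessarily what `score.py` uses. It compares jumping straight to step $t$ with walking the chain step by step.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)   # assumed linear schedule, beta_1 .. beta_T
alphas = np.cumprod(1.0 - betas)     # alpha_t = prod_{s <= t} (1 - beta_s)

def q_sample(x0, t, rng):
    """Jump straight to step t: x_t = sqrt(alpha_t) x0 + sqrt(1 - alpha_t) z."""
    z = rng.standard_normal(x0.shape)
    a = alphas[t - 1]                # t is 1-indexed here
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * z

def q_chain(x0, t, rng):
    """Reach step t one small step at a time: x_s = sqrt(1 - beta_s) x_{s-1} + sqrt(beta_s) eps."""
    x = x0.copy()
    for s in range(t):
        x = np.sqrt(1.0 - betas[s]) * x + np.sqrt(betas[s]) * rng.standard_normal(x.shape)
    return x

rng = np.random.default_rng(0)
x0 = np.full(20_000, 1.5)            # many copies of one data point
t = 300
jump, chain = q_sample(x0, t, rng), q_chain(x0, t, rng)
print(jump.mean(), chain.mean(), np.sqrt(alphas[t - 1]) * 1.5)
print(jump.var(), chain.var(), 1.0 - alphas[t - 1])
```

Because the jump has the same distribution as the chain, training can sample a random $t$ and form $x_t$ in a single step.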

Diffusion as VAE

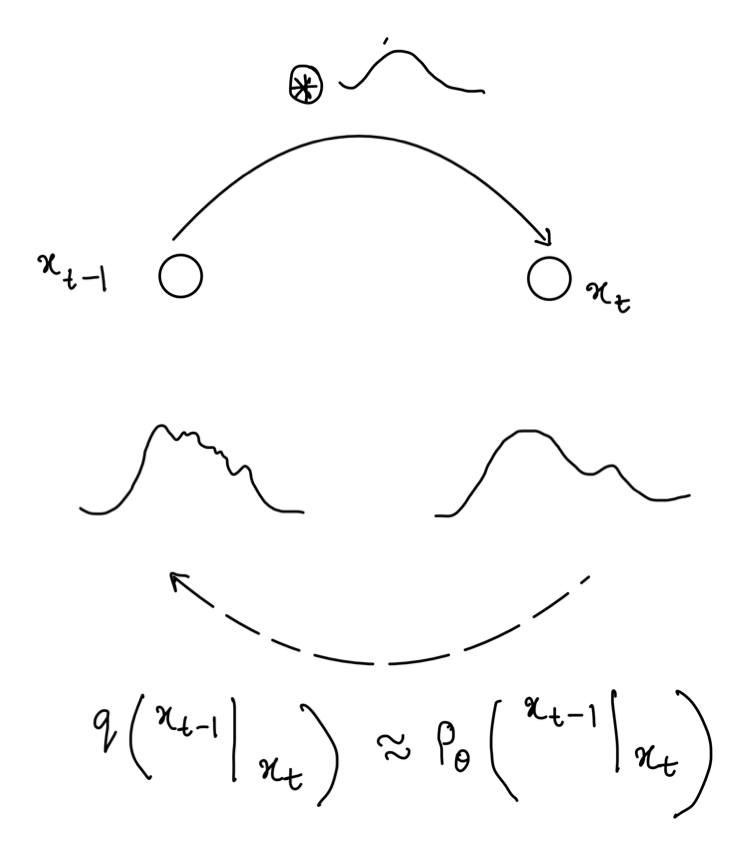

The image depicts how VAEs and diffusion models are connected. In a VAE, we go from the image domain to the latent domain. We enforce the latent to be Gaussian, $\mathcal{N}(0,1)$, by constraining it with a KL divergence term. The decoder then learns the inverse transformation. This notion of VAE is very similar to

We can also see this probability matching for VAEs and diffusion models. We will

Conditional generation

However, they used a VQ-VAE instead of a VAE; the rest of the architecture remains the same.

References

[1] Chan, S. H. (2024). Tutorial on Diffusion Models for Imaging and Vision. arXiv preprint arXiv:2403.18103. https://arxiv.org/pdf/2403.18103.pdf

[2] Weng, L. (2021, July). What are diffusion models? Lil’Log. https://lilianweng.github.io/posts/2021-07-11-diffusion-models/

[3] Boddeti, V. (2024). Deep Learning (course). https://hal.cse.msu.edu/teaching/2024-spring-deep-learning/

[4] Vahdat, A., et al. (2022). CVPR 2022 Tutorial on diffusion models. https://cvpr2022-tutorial-diffusion-models.github.io/

[5] Nike. Invincible 3 (product page). https://www.nike.com/t/invincible-3-mens-road-running-shoes-6MqQ72/DR2615-007

[6] Ruiz, N., et al. (2022). DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation. https://dreambooth.github.io/

[7] Nguyen, K. How to generate Gaussian samples. MTI Technology (Medium). https://medium.com/mti-technology/how-to-generate-gaussian-samples-347c391b7959
