Hello! I am a final-year master's student in the CSE Department at Michigan State University, advised by Dr. Vishnu Boddeti. I recently defended my thesis on compositionality in diffusion models. I want to thank my thesis committee (Dr. Xiaoming Liu, Dr. Yu Kong, and Dr. Felix Juefei Xu) for their guidance and help. I am extremely lucky to have amazing colleagues at the Human Analysis Lab. I hold a bachelor's degree from IIT Guwahati. I'm proud of my research experience, including the attempts that didn't work out; you can read more about them on my blog. After persistent work on fairness, generalization, and spurious correlations, I published my first ICLR paper, CoInD: Enabling Logical Compositions in Diffusion Models.

Collaborations

I'm always open to collaborating with fellow researchers and industry partners. While my primary research is on compositionality and generalization in generative models, I'm open to exploring new topics and innovative ideas.

Publications

CoInD: Enabling Logical Compositions in Diffusion Models

Sachit Gaudi, Gautam Sreekumar, Vishnu Boddeti

ICLR, 2025

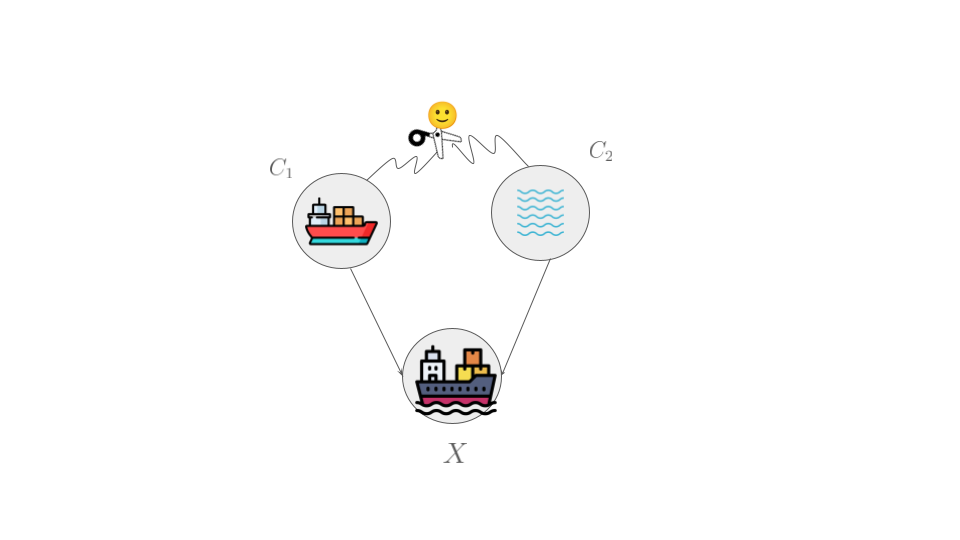

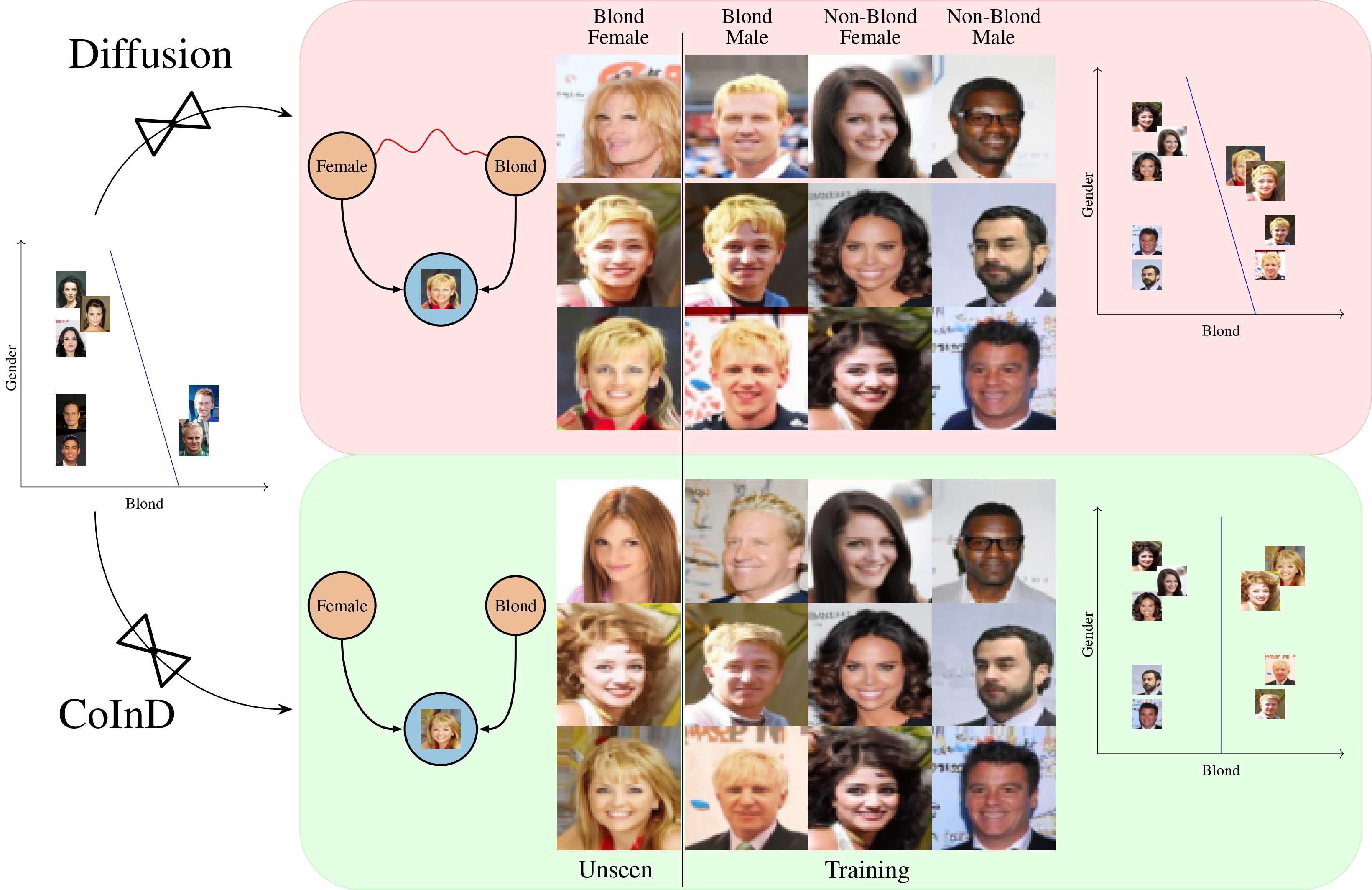

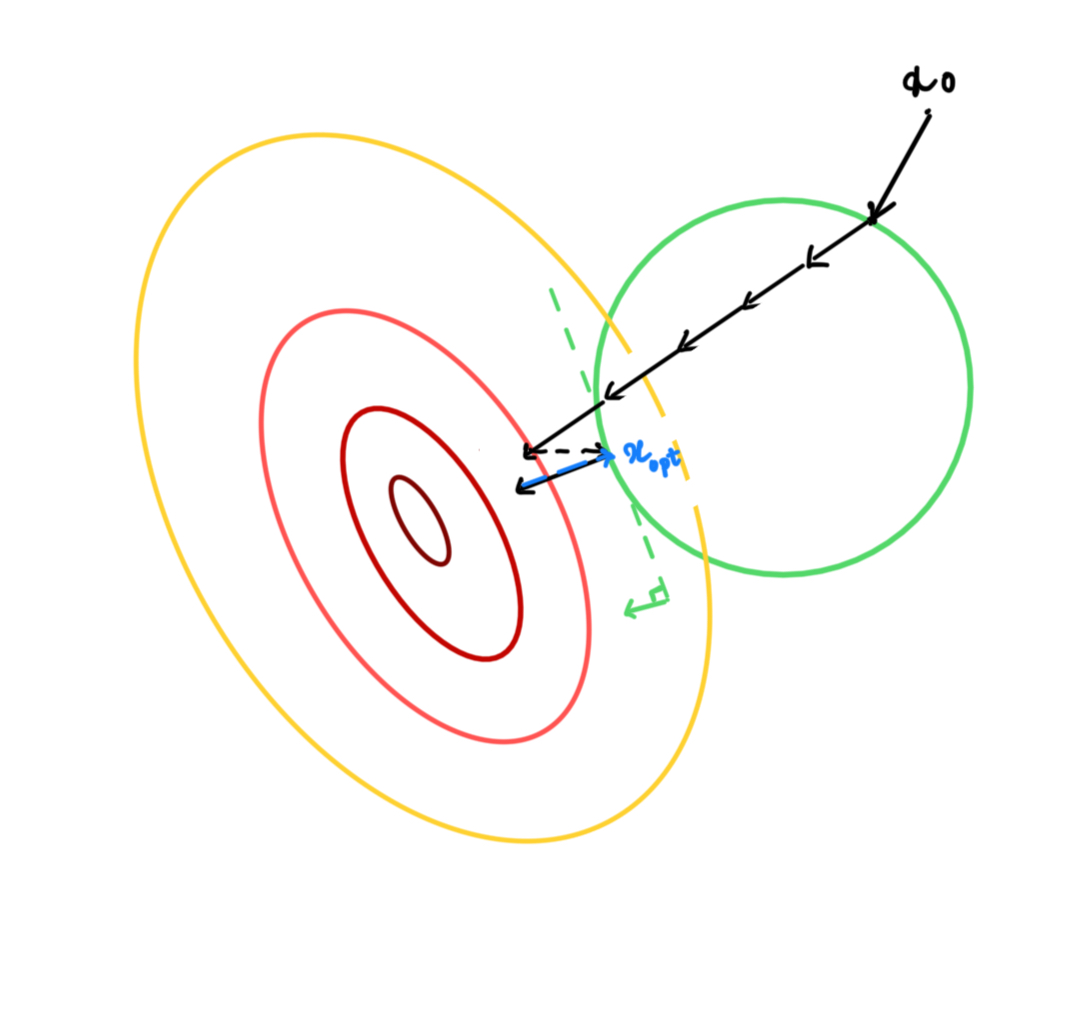

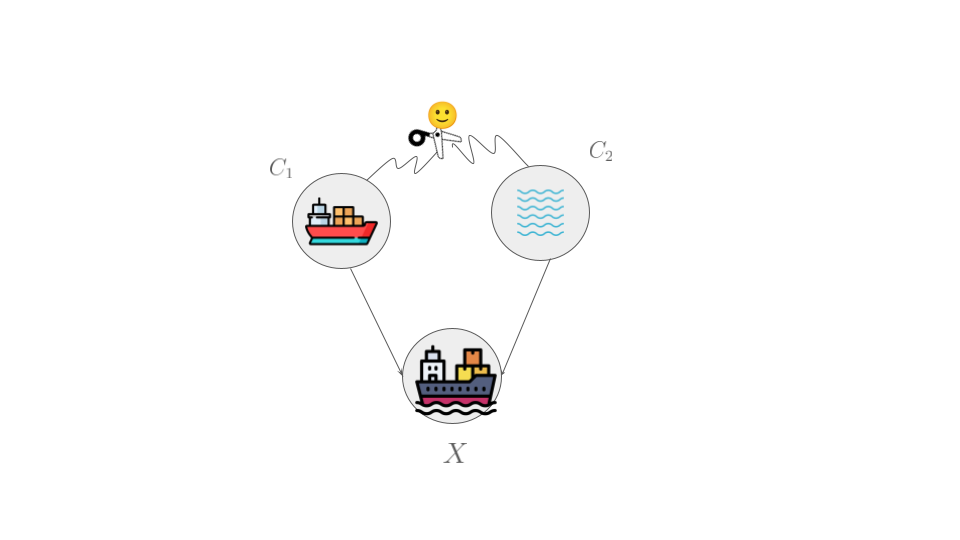

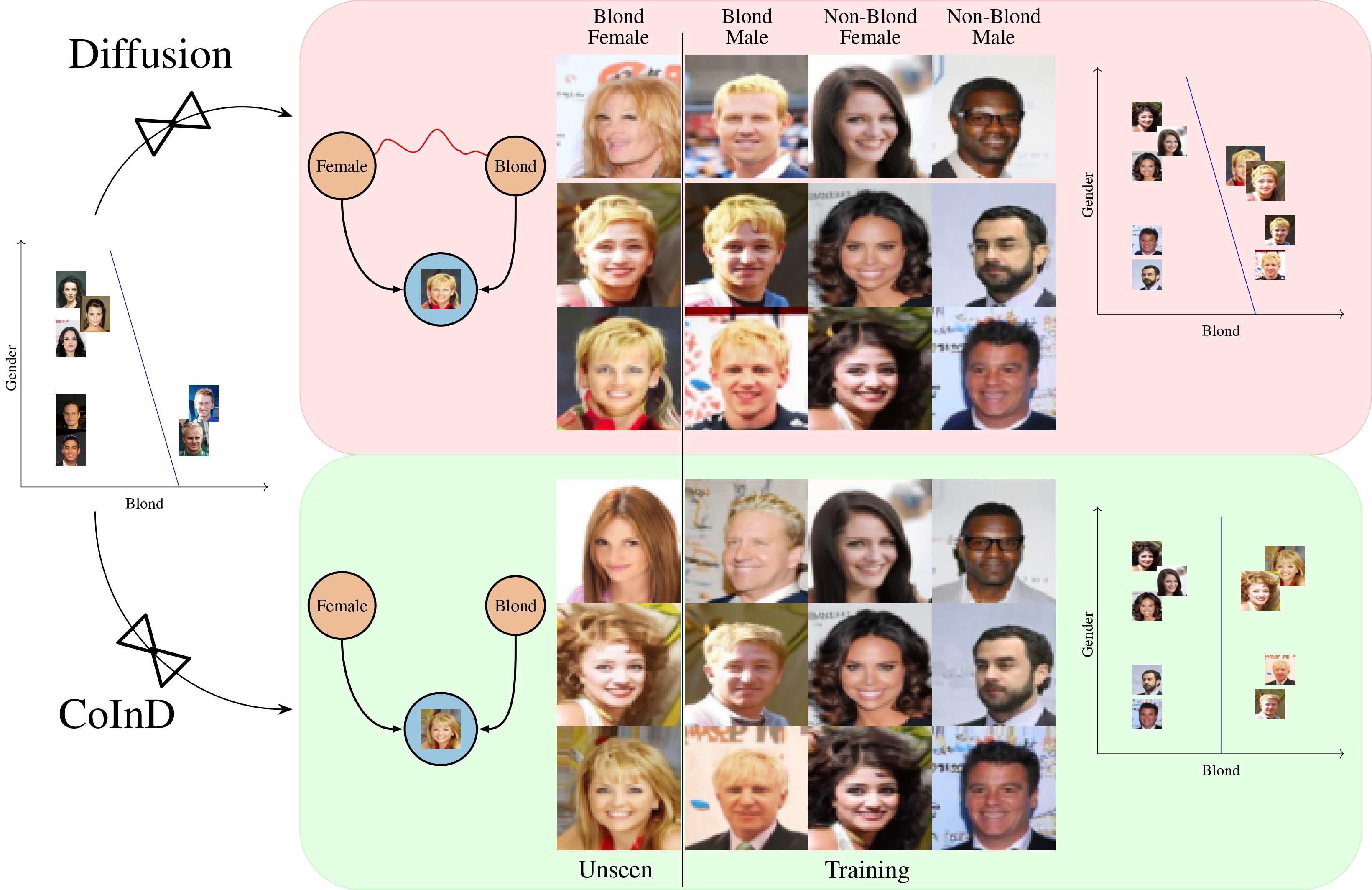

How can we learn generative models to sample data with arbitrary logical compositions of statistically independent attributes? The prevailing solution is to sample from distributions expressed as a composition of attributes' conditional marginal distributions under the assumption that they are statistically independent. This paper shows that standard conditional diffusion models violate this assumption, even when all attribute compositions are observed during training. Moreover, this violation is significantly more severe when only a subset of the compositions is observed. We propose CoInD to address this problem. It explicitly enforces statistical independence between the conditional marginal distributions by minimizing Fisher's divergence between the joint and marginal distributions. The theoretical advantages of CoInD are reflected in both qualitative and quantitative experiments, demonstrating significantly more faithful and controlled generation of samples for arbitrary logical compositions of attributes. The benefit is more pronounced in scenarios where current solutions that rely on the assumption of conditionally independent marginals struggle, namely logical compositions involving the NOT operation and settings where only a subset of compositions is observed during training.
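For readers who want the independence constraint spelled out, here is a rough sketch for the two-attribute case. It assumes the attributes $c_1$ and $c_2$ are conditionally independent given the sample $x$; the notation ($s_\theta$ for the learned score, $D_F$ for Fisher's divergence) is chosen for illustration and may differ from the paper, which also covers details such as diffusion timesteps and loss weighting that are omitted here.

```latex
% Assumption: c_1 and c_2 are conditionally independent given x.
p(c_1, c_2 \mid x) = p(c_1 \mid x)\, p(c_2 \mid x)
\;\;\Longrightarrow\;\;
p(x \mid c_1, c_2) \propto \frac{p(x \mid c_1)\, p(x \mid c_2)}{p(x)}

% Taking \nabla_x \log of both sides (the normalizer does not depend on x):
\nabla_x \log p(x \mid c_1, c_2)
  = \nabla_x \log p(x \mid c_1) + \nabla_x \log p(x \mid c_2) - \nabla_x \log p(x)

% Fisher's divergence between densities p and q:
D_F(p \,\|\, q) = \mathbb{E}_{x \sim p}\!\left[ \left\| \nabla_x \log p(x) - \nabla_x \log q(x) \right\|_2^2 \right]

% Illustrative penalty on a learned score model s_\theta (a sketch, not the exact training loss):
\mathcal{L}_{\mathrm{CI}}
  = \mathbb{E}\!\left[ \left\| s_\theta(x, c_1, c_2) - s_\theta(x, c_1) - s_\theta(x, c_2) + s_\theta(x) \right\|_2^2 \right]
```

The penalty is zero exactly when the learned joint score matches the score of the composed marginals, which is the condition that composition-based sampling relies on.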

@inproceedings{gaudi2025coind,
  title={CoInD: Enabling Logical Compositions in Diffusion Models},
  author={Sachit Gaudi and Gautam Sreekumar and Vishnu Boddeti},
  booktitle={The Thirteenth International Conference on Learning Representations},
  year={2025},
  url={https://openreview.net/forum?id=cCRlEvjrx4}
}

Compositional World Knowledge leads to High Utility Synthetic data

Sachit Gaudi, Gautam Sreekumar, Vishnu Boddeti

SynthData, ICLRW, 2025

Machine learning systems struggle with robustness under subpopulation shifts. This problem becomes especially pronounced in scenarios where only a subset of attribute combinations is observed during training, a severe form of subpopulation shift referred to as compositional shift. To address this problem, we ask the following question: Can we improve robustness by training on synthetic data spanning all possible attribute combinations? We first show that training conditional diffusion models on limited data leads to an incorrect underlying distribution. Therefore, synthetic data sampled from such models is unfaithful and does not improve the performance of downstream machine learning systems. To address this problem, we propose COIND, which reflects the compositional nature of the world by enforcing conditional independence through minimizing Fisher's divergence between joint and marginal distributions. We demonstrate that synthetic data generated by COIND is faithful, and that this translates to state-of-the-art worst-group accuracy on compositional shift tasks on CelebA.
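Worst-group accuracy, the metric mentioned above, can be illustrated with a small sketch. It assumes each example carries a group identifier (here a tuple of attribute values) and reports the accuracy of the worst-performing group. The function name, the toy data, and the attribute values are hypothetical and are not taken from the paper's evaluation code.

```python
from collections import defaultdict


def worst_group_accuracy(labels, predictions, groups):
    """Return (worst accuracy, per-group accuracy dict).

    A group is any hashable identifier, e.g. a tuple of attribute values.
    """
    if not labels:
        raise ValueError("need at least one example")
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, y_hat, g in zip(labels, predictions, groups):
        total[g] += 1
        correct[g] += int(y == y_hat)
    per_group = {g: correct[g] / total[g] for g in total}
    return min(per_group.values()), per_group


# Hypothetical toy data: two binary attributes define four groups.
labels = [1, 1, 0, 0, 1, 0]
predictions = [1, 1, 0, 1, 0, 0]
groups = [
    ("blond", "male"),
    ("blond", "male"),
    ("dark", "female"),
    ("dark", "female"),
    ("blond", "female"),
    ("dark", "male"),
]

worst, per_group = worst_group_accuracy(labels, predictions, groups)
average = sum(int(y == p) for y, p in zip(labels, predictions)) / len(labels)
print(f"average accuracy: {average:.2f}, worst-group accuracy: {worst:.2f}")
```

In this toy example the average accuracy looks reasonable while one group is always misclassified, which is the kind of failure that average accuracy hides and worst-group accuracy exposes.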

@inproceedings{gaudi2025compositional,
  title={Compositional World Knowledge leads to High Utility Synthetic data},
  author={Sachit Gaudi and Gautam Sreekumar and Vishnu Boddeti},
  booktitle={Will Synthetic Data Finally Solve the Data Access Problem?},
  year={2025},
  url={https://openreview.net/forum?id=e9i1Frx5Kc}
}

☕ Apart from Research

🧑🏫 I teach programming (CSE 232) at Michigan State University.

⛳ I enjoy running on a rare sunny day in East Lansing, MI. On a gloomy day, I sit down with a cup of coffee ☕ and LeetCode.

💡 I love building products. I'm currently working on an image editing tool.
